(5 min read)

There’s a pattern most organisations recognise.

Year after year, more money goes into cybersecurity technology. New tools, better monitoring, smarter defences: the stack keeps growing.

And still, serious incidents keep happening.

Not because the technology failed, but because someone did something entirely understandable. They clicked a convincing link, responded quickly under pressure, trusted a message that looked legitimate, or took a shortcut because they were too busy.

So, the obvious question isn’t whether people matter; we already know they do. The real question is why acting on that knowledge feels so hard.

Cyber incidents are rarely “just technical”

In the UK, 43% of businesses reported a cyber breach or attack in the last 12 months (and those are just the reported ones). Across the EU, more than one in five enterprises reported ICT security incidents in a single year, with some countries reporting much higher rates.

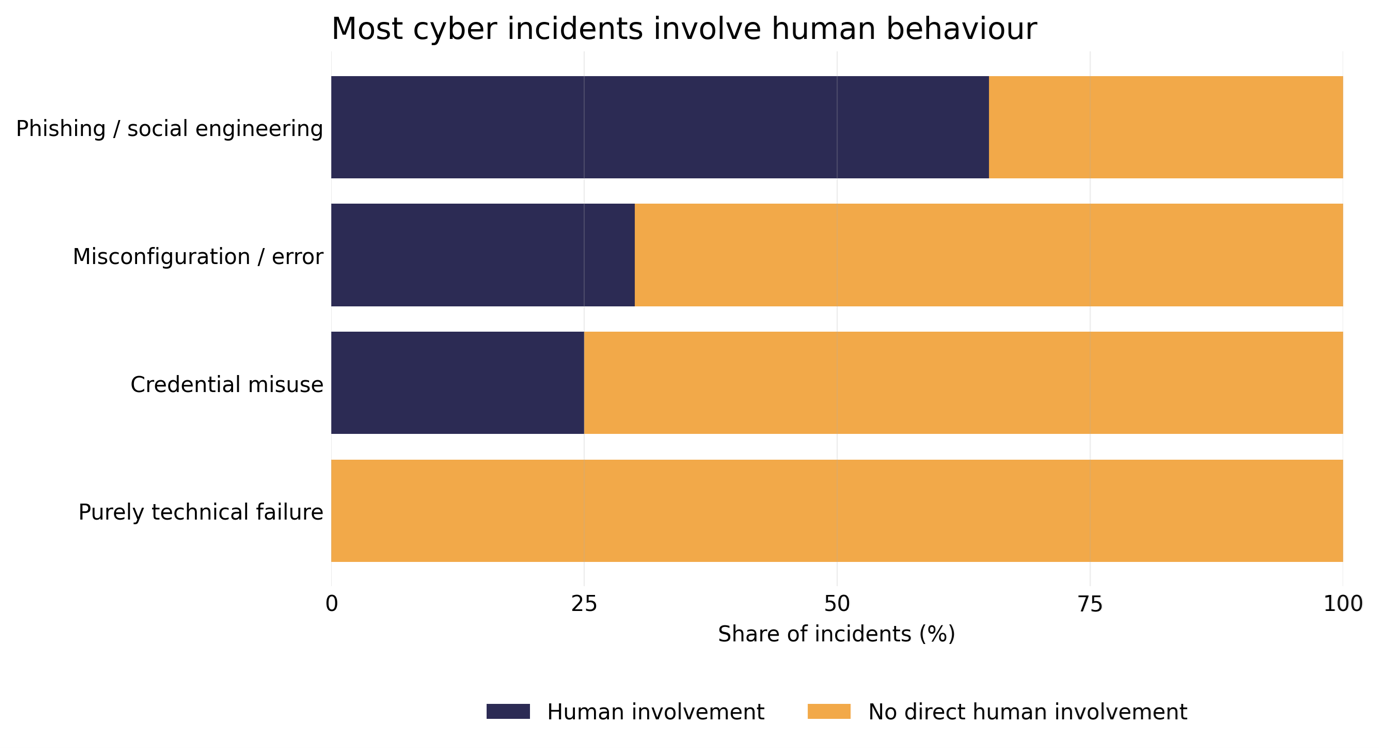

What’s striking isn’t just how often incidents occur, but how they happen.

They rarely come down to a single technical failure. More often, they involve everyday human behaviour: responding too quickly when busy, trusting familiar brands or colleagues, or working around processes that feel slow or unclear.

This isn’t carelessness. It’s how people operate in complex, fast-moving environments.

And it’s exactly why attackers focus on people.

If we know this, why do organisations hesitate?

The hesitation isn’t irrational; it’s human. Behavioural science helps explain what’s really going on. Here are a few of the reasons organisations hold back.

Optimism bias. “It won’t happen to us.”

Optimism bias leads people, including experienced leaders, to believe they’re less likely than others to experience negative events, such as a cyber attack.

You hear it in familiar phrases.

“We’ve never had a serious incident.”

“We’re not a high-value target.”

“We’d spot something like that.”

Until it happens.

Attackers rely on this bias, not because leaders are naïve, but because near misses and small errors often go unnoticed or unreported. Over time, the absence of visible damage creates a false sense of safety.

Status quo bias. Technology feels safer than change.

Introducing training that genuinely changes behaviour takes time. It requires leadership attention and cultural support, and it asks people to reflect on habits they’ve built up over years.

On the other hand, technology upgrades feel simpler. They’re tangible, contained, and easier to justify.

But while technology can block certain threats, it doesn’t change behaviour. And behaviour is where many incidents begin.

Sticking with what feels familiar isn’t laziness. It’s short-term predictability winning out over long-term resilience.

Social proof. “What is everyone else doing?”

People look to others to decide what’s normal.

If cyber training is treated mainly as a compliance requirement rather than a strategic investment, few organisations want to be the first to do something different. Without visible role models, change and progress are slow.

No one wants to be the outlier.

An outdated mental model. “This is an IT issue.”

Many organisations still frame cybersecurity as a technical problem. Attackers don’t.

Attackers focus on people because people are busy, people trust, people take shortcuts, and people worry about getting things wrong.

When cybersecurity is treated purely as an IT responsibility, the most targeted part of the system ends up under-supported.

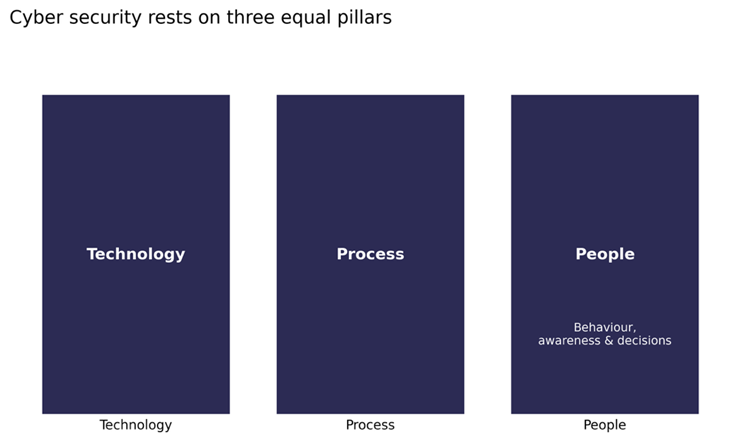

Cybersecurity rests on three equal pillars

Effective cybersecurity depends on technology, process, and people.

When one pillar is weaker, and it’s usually people, overall resilience drops. Even the best tools can’t compensate for habits that haven’t been designed or reinforced.

Most organisations wouldn’t knowingly leave a technical control half-built. Yet this is often what happens on the human side.

(We explore this in more depth in our blog on the People, Process, Technology model.)

What actually helps organisations take the next step

Seeing people as part of the system

Employees aren’t the weakest link. They’re an essential defensive layer.

When people understand threats and feel confident responding, risk reduces. Reporting improves. Incidents are contained earlier.

Designing training around how people actually behave

Traditional awareness training assumes that information leads to behaviour change.

It rarely does. Behavioural science shows change happens through repetition and reinforcement, realistic scenario-based learning, feedback at the moment of decision, and social norms that reward secure behaviour.

This is the foundation Psybersafe is built on.

Creating psychological safety

When people fear blame or embarrassment, mistakes get hidden.

Organisations that encourage early reporting and learning recover faster and suffer fewer severe incidents. Training works the same way. People engage and participate when they feel supported, not judged.

Why Psybersafe takes a different approach

Psybersafe is built on behavioural science, not fear, blame, or compliance-driven training.

The approach focuses on behaviour change rather than information overload, reinforces secure habits over time, uses scenarios employees recognise, and supports a culture of learning instead of blame.

The goal isn’t perfection. It’s progress that sticks.

Final thought

Investing only in technology while ignoring human behaviour leaves organisations exposed where attackers are strongest.

The next step isn’t buying more tools. It’s empowering people.

And that step is often far easier than most organisations think.

Data and references

UK Cyber Security Breaches Survey 2025.

Eurostat ICT Security Incidents, 2023.

Verizon Data Breach Investigations Report 2025, EMEA edition.

These sources consistently show that cyber incidents are driven more by human decision-making under pressure than by technical failure alone.

A reasonable next step

If you want to explore how behavioural-science-led training can reduce real-world risk with minimal disruption, that’s exactly what Psybersafe was designed to help with.

Contact us today and sign up to our newsletter, packed with hints and tips on how to stay cyber safe.

Mark Brown is a behavioural science expert with significant experience in inspiring organisational and culture change that lasts. If you’d like to chat about using Psybersafe in your business to help you stay cyber secure, contact Mark today.
