How Kahneman's System 1 and System 2 impact cybersecurity behaviour

In the realm of cybersecurity, we know that human behaviour plays a pivotal role in determining an organisation’s resilience to threats.

Understanding how people think and make decisions is essential to creating effective security measures.

Daniel Kahneman's Thinking, Fast and Slow introduces the concepts of System 1 and System 2 thinking, offering valuable insights into how we process information and respond to potential risks. These insights can be leveraged to encourage more secure behaviours and mitigate vulnerabilities.

What is System 1 and System 2 thinking?

System 1 operates automatically and intuitively, accounting for most of our mental processes. It’s fast, instinctive, and effortless—governing behaviours like automatically clicking on a link in an email without much thought.

In contrast, System 2 thinking is slower, deliberate, and effortful. We engage this type of thinking when we face tasks that require focused attention or careful decision-making, such as evaluating an unfamiliar email for signs of phishing.

As Kahneman explains: “System 1 runs the show”. That’s because it is our brain's default mode, handling routine and automatic tasks. Most cybersecurity threats exploit System 1 thinking because of its speed and susceptibility to errors. The challenge for organisations is to implement strategies that mitigate System 1 vulnerabilities while encouraging the activation of System 2 when critical decisions arise.

What are the cybersecurity threats that target System 1?

Cyber attackers understand that people are more likely to react instinctively than rationally. Phishing emails, for example, are crafted to trigger fast, emotional responses such as fear, urgency, or curiosity. A message that says “Your account will be suspended unless you update your password immediately” is designed to trigger System 1 and elicit immediate, impulsive action, bypassing System 2’s critical thinking.

These tactics succeed because System 1 relies on heuristics, or mental shortcuts. For instance, we trust familiar brands, respond to authority, and act quickly under pressure. Cyber criminals exploit these tendencies, making it critical for organisations to educate employees on recognising these cues.

Engaging System 2 to strengthen cybersecurity

When designing cyber awareness training or interventions, the tendency is to focus on System 2: rational, sensible explanations and lists of dos and don’ts. But that often misses the mark. Because System 1 dominates most decisions, any attempt to activate System 2 against cyber threats has to work within that reality rather than ignore it. The challenge is creating environments where individuals are encouraged to slow down and think critically. Based on our experience of working with organisations, strategies that work include:

- Awareness and training: Educate employees about cybersecurity threats and how they exploit intuitive thinking. Training can help shift behaviour from automatic to deliberate. But awareness alone is not enough: your training must ensure that people actually act or react appropriately when, for example, they encounter suspicious email addresses or urgent demands.

- Cues and prompts: Introduce visual or contextual reminders that trigger System 2 thinking. For instance, a warning pop-up stating, “This link could be unsafe. Are you sure you want to proceed?” encourages reflection before clicking.

- Simplifying security processes: Harness our reliance on System 1 by making secure behaviours easy and automatic. Features like multifactor authentication or default encryption help ensure compliance without requiring active decision-making.

Nudging behaviour with science

When designing our online cybersecurity training, we draw on in-depth models of behaviour such as the COM-B model (Michie et al., 2011), insights from Kahneman’s work, and Dr. Robert Cialdini’s principles of persuasion (Cialdini, 2007). By using principles such as authority (e.g., endorsements from cybersecurity experts) and social proof (e.g., highlighting peers’ secure behaviours), organisations can guide intuitive decisions toward safer outcomes.

For example, an internal campaign showcasing that “85% of employees regularly report suspicious emails” taps into social proof, aligning System 1’s heuristic-driven decision-making with positive cybersecurity behaviour (provided, of course, that the figure is true). This encourages others to adopt the correct, socially accepted behaviour. Similarly, communications that emphasise the endorsement of security protocols by trusted leaders within the company can build credibility and encourage compliance.

Engaging System 1 to strengthen cybersecurity

While System 1 is often seen as a vulnerability in cybersecurity due to its reliance on heuristics and quick judgments, it can also be leveraged to create safer behaviours. The key is to align security measures with System 1’s automatic and intuitive processes, making secure behaviours effortless and instinctive.

Practical strategies for engaging System 1 include:

- Visual cues for immediate recognition
  - Use visual indicators like padlock icons for secure (HTTPS) connections or colour-coded warnings (e.g., red for danger, green for safe) to trigger immediate, intuitive responses.
  - For phishing detection, flag emails from external senders or with potential risks by highlighting them in bold, distinct colours that draw attention automatically.

- Default security settings
  - Set security features, such as multifactor authentication (MFA), as the default option. This removes the need for users to actively opt in and ensures secure practices are followed without additional effort.
  - Enable auto-locking for devices after a brief period of inactivity to prevent unauthorised access.

- Streamlined password management
  - Provide employees with a company-approved password manager to generate and store strong passwords. This eliminates the cognitive load of remembering complex passwords, aligning with System 1’s preference for ease and simplicity, and encourages employees to use longer, more complex, and safer passwords.

- Behavioural nudges in workflows

-

- Add gentle nudges, such as pop-ups that say, “Are you sure this email is from a trusted source?” before allowing users to open attachments or click links. These prompts briefly engage System 2 to pause and consider potential risks.

-

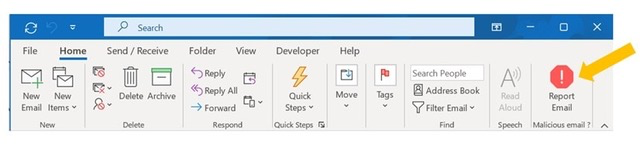

- Use pre-written email templates or a ‘Reporting’ button in the email client for employees to report suspicious emails quickly and effortlessly, encouraging proactive security behaviours.

- Gamified security training
  - Introduce engaging, game-like simulations to teach employees about cybersecurity threats. For example, if you run simulated phishing exercises, make sure you reward users who identify threats correctly, so that immediate feedback and positive reinforcement strengthen secure behaviour.
  - Training such as Psybersafe’s online course, which uses short, interactive monthly episodes, is very effective at embedding better security habits and coaching employees to adopt safer behaviours.

- Trust signals for immediate reassurance
  - Include clear trust signals, such as verified badges or organisational branding, in communications to reinforce authenticity and reduce the likelihood of employees falling for phishing attempts.

By embedding these practical strategies into an organisation’s cybersecurity framework, businesses can align security measures with System 1’s intuitive nature. This reduces reliance on effortful decision-making (System 2) while promoting secure behaviours as a seamless, automatic part of daily routines.

Conclusion

Understanding System 1 and System 2 thinking isn’t just a theoretical exercise—it’s a practical tool for designing more effective cybersecurity strategies. Recognising that most decisions are made intuitively allows organisations to craft interventions that align with natural human tendencies, while nudging individuals toward safer actions.

We quoted Kahneman earlier in this article: “System 1 runs the show.” In cybersecurity, the key to success lies in respecting this truth and working with System 1, while also finding ways to activate System 2 when it matters most. Through training, thoughtful design, and behavioural nudges, organisations can turn instinctive reactions into deliberate, secure actions—protecting individuals and data alike.

References:

Cialdini, R. B. (2007). Influence: The Psychology of Persuasion. New York: HarperCollins.

Kahneman, D. (2011). Thinking, Fast and Slow. New York: Farrar, Straus and Giroux.

Michie, S., van Stralen, M. M., & West, R. (2011). The behaviour change wheel: A new method for characterising and designing behaviour change interventions. Implementation Science, 6, 42. https://doi.org/10.1186/1748-5908-6-42

We love behavioural science. We’ve studied it and we know it works. Why not contact us to see how we can help your organisation build better, long-term cyber security habits? If you want to know more about the science of persuasion and influence, and behavioural science in general, have a look at our sister site https://influenceinaction.co.uk/

Sign up to get our monthly newsletter, packed with hints and tips on how to stay cyber safe.

Mark Brown is a behavioural science expert with significant experience in inspiring organisational and culture change that lasts. If you’d like to chat about using Psybersafe to help your business stay cyber secure, contact Mark today.